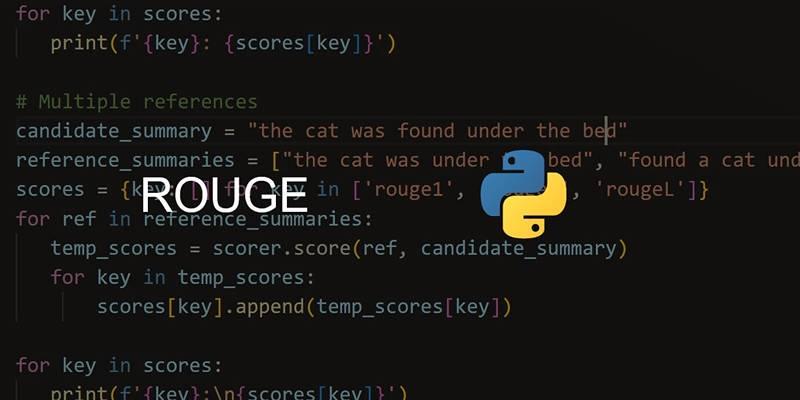

ROUGE (Recall-Oriented Understudy for Gisting Evaluation) is a family of metrics that measure the overlap between two pieces of text. Typically, one text is generated by a machine, such as a summarization or text-generation model, and the other is a human-written reference. ROUGE produces a score that reflects how much of the reference's wording reappears in the machine-generated output. It does not judge grammar or coherence directly. Instead, it counts shared words and word sequences as a proxy for how much of the reference's content the machine's version captures.

Why ROUGE Matters in NLP

Reliable, automatic evaluation is essential in NLP. Human reviewers are invaluable, but their time is limited and their judgments can vary. ROUGE offers a quantitative, repeatable way to assess output quality, enabling developers and data scientists to:

- Track improvements in text generation models

- Benchmark performance across different algorithms

- Optimize outputs for tasks like summarization, translation, and question-answering

For machine-generated summaries, chatbot replies, or automated reports, ROUGE serves as a quick automated check before human review.

Core ROUGE Metrics Explained

ROUGE includes several scoring systems, each designed to evaluate different aspects of text similarity. Understanding each of these helps in selecting the right approach based on the task.

ROUGE-N

ROUGE-N counts the n-grams (contiguous sequences of N words) that appear in both the generated text and the reference text. Common versions include:

- ROUGE-1: Evaluates unigram (single-word) overlap

- ROUGE-2: Assesses bigram (two-word phrase) overlap

These scores measure how many of the reference's words (ROUGE-1) or two-word phrases (ROUGE-2) also appear, exactly, in the AI-generated version. A high ROUGE-1 score suggests that the generated text covers similar content to the human-written version. A high ROUGE-2 score additionally suggests that it preserves some of the reference's phrasing.
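The counting behind ROUGE-N fits in a few lines of Python. This is a simplified sketch, not a reference implementation. The helper names (`tokenize`, `ngrams`, `rouge_n`) are illustrative, tokenization is assumed to be lowercasing plus keeping only letters and digits, and stemming, stopword handling, and multiple references are left out.

```python
from collections import Counter
import re


def tokenize(text: str) -> list[str]:
    """Lowercase and keep runs of letters/digits (a simplifying assumption)."""
    return re.findall(r"[a-z0-9]+", text.lower())


def ngrams(tokens: list[str], n: int) -> Counter:
    """Count every contiguous n-gram in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def rouge_n(reference: str, candidate: str, n: int = 1) -> dict[str, float]:
    """Precision, recall and F1 for ROUGE-N against a single reference."""
    ref_counts = ngrams(tokenize(reference), n)
    cand_counts = ngrams(tokenize(candidate), n)
    # Counter '&' keeps the minimum count of each n-gram, so a repeated
    # n-gram is credited no more often than it occurs in both texts.
    overlap = sum((ref_counts & cand_counts).values())
    ref_total = sum(ref_counts.values())
    cand_total = sum(cand_counts.values())
    precision = overlap / cand_total if cand_total else 0.0
    recall = overlap / ref_total if ref_total else 0.0
    f1 = 2 * precision * recall / (precision + recall) if overlap else 0.0
    return {"precision": precision, "recall": recall, "f1": f1}


reference = "The dog chased the ball across the yard."
candidate = "The dog chased the ball."
print(rouge_n(reference, candidate, n=1))
print(rouge_n(reference, candidate, n=2))
```

With these two sentences, both ROUGE-1 and ROUGE-2 give a precision of 1.0. Recall is 5/8 for unigrams and 4/7 for bigrams, because the candidate omits the reference's final words.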

ROUGE-L

ROUGE-L is based on the Longest Common Subsequence (LCS). This is the longest sequence of words that appears in both texts in the same order, though not necessarily next to each other. Because it rewards in-order matches, this metric reflects the structure and flow of content rather than just isolated words. ROUGE-L is especially useful when the generated text follows the reference's word order but inserts, omits, or rewords some words along the way.
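ROUGE-L only needs the length of the longest common subsequence, which the standard dynamic-programming algorithm computes in O(m·n) time. The sketch below is illustrative and makes a few assumptions. The function names are hypothetical, tokenization is simple lowercasing, and F1 uses equal weighting; Lin's original definition uses a weighted F-measure with a β parameter.

```python
import re


def tokenize(text: str) -> list[str]:
    """Lowercase and keep runs of letters/digits (a simplifying assumption)."""
    return re.findall(r"[a-z0-9]+", text.lower())


def lcs_length(a: list[str], b: list[str]) -> int:
    """Length of the longest common subsequence via row-by-row dynamic programming."""
    prev = [0] * (len(b) + 1)
    for x in a:
        curr = [0] * (len(b) + 1)
        for j, y in enumerate(b, start=1):
            if x == y:
                curr[j] = prev[j - 1] + 1  # extend the match from the diagonal
            else:
                curr[j] = max(prev[j], curr[j - 1])  # skip a word in a or b
        prev = curr
    return prev[-1]


def rouge_l(reference: str, candidate: str) -> dict[str, float]:
    """LCS-based precision, recall and (equally weighted) F1."""
    ref, cand = tokenize(reference), tokenize(candidate)
    lcs = lcs_length(ref, cand)
    precision = lcs / len(cand) if cand else 0.0
    recall = lcs / len(ref) if ref else 0.0
    f1 = 2 * precision * recall / (precision + recall) if lcs else 0.0
    return {"precision": precision, "recall": recall, "f1": f1}


print(lcs_length(tokenize("the cat sat on the mat"),
                 tokenize("the cat quietly sat on a mat")))
```

In the example call, "quietly" and "a" are skipped, and the in-order words "the cat sat on mat" still form a common subsequence of length 5. This gap tolerance is what distinguishes ROUGE-L from ROUGE-2.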

ROUGE-S

ROUGE-S (also called ROUGE-Skip) measures skip-bigrams: word pairs that appear in the same order in both texts but may be separated by other words. This makes it tolerant of words inserted or omitted between matches. Like the other variants, though, it still requires the words themselves to match exactly, so a synonym earns no credit. In machine translation or chatbot responses, for instance, ROUGE-S can reward outputs that keep the reference's key word pairs in order even when the surrounding phrasing differs.
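A skip-bigram is simply an ordered pair of positions i < j, so `itertools.combinations` enumerates them directly. This is a minimal sketch with hypothetical function names and simple lowercase tokenization. It places no limit on the gap between the two words; the original toolkit also offers variants that cap the skip distance, such as ROUGE-S4.

```python
from collections import Counter
from itertools import combinations
import re


def tokenize(text: str) -> list[str]:
    """Lowercase and keep runs of letters/digits (a simplifying assumption)."""
    return re.findall(r"[a-z0-9]+", text.lower())


def skip_bigrams(tokens: list[str]) -> Counter:
    """Count every in-order word pair, with any number of words between them."""
    # combinations() preserves input order, so each pair is (earlier, later).
    return Counter(combinations(tokens, 2))


def rouge_s(reference: str, candidate: str) -> dict[str, float]:
    """Skip-bigram precision, recall and F1 with an unlimited gap size."""
    ref_pairs = skip_bigrams(tokenize(reference))
    cand_pairs = skip_bigrams(tokenize(candidate))
    overlap = sum((ref_pairs & cand_pairs).values())  # clipped pair counts
    precision = overlap / sum(cand_pairs.values()) if cand_pairs else 0.0
    recall = overlap / sum(ref_pairs.values()) if ref_pairs else 0.0
    f1 = 2 * precision * recall / (precision + recall) if overlap else 0.0
    return {"precision": precision, "recall": recall, "f1": f1}


print(rouge_s("police killed the gunman", "the gunman police killed"))
```

Reordering "police killed the gunman" as "the gunman police killed" preserves only two of the six pairs, "police ... killed" and "the ... gunman", so recall drops to 1/3. Replacing "killed" with a synonym would remove every pair that contains it.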

How ROUGE Scores Are Calculated

ROUGE scores are typically reported in three parts:

- Precision: The fraction of the machine-generated units (n-grams, LCS words, or skip-bigrams) that also appear in the reference.

- Recall: The fraction of the reference's units that also appear in the machine-generated output.

- F1 Score: The harmonic mean of precision and recall, which balances the two.

A higher ROUGE recall score means the output reproduces more of the reference's content. A higher precision score means less of the output consists of material absent from the reference, so it is less likely to be padded or off-topic. The F1 score provides a balance between the two.
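For ROUGE-N, the three scores can be written out as below. The notation is introduced here for illustration: $c_{\text{gen}}(g)$ and $c_{\text{ref}}(g)$ are the number of times n-gram $g$ occurs in the generated and reference texts. Taking the minimum "clips" each n-gram so it is credited at most as often as it appears in both. ROUGE-L substitutes the LCS length for the overlap and word counts for the denominators, and ROUGE-S uses skip-bigram counts.

$$
P = \frac{\sum_{g} \min\big(c_{\text{gen}}(g),\, c_{\text{ref}}(g)\big)}{\sum_{g} c_{\text{gen}}(g)}, \qquad
R = \frac{\sum_{g} \min\big(c_{\text{gen}}(g),\, c_{\text{ref}}(g)\big)}{\sum_{g} c_{\text{ref}}(g)}, \qquad
F_1 = \frac{2PR}{P + R}
$$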

Here’s an example for context:

- Reference: “The dog chased the ball across the yard.”

- Generated: “The dog chased the ball.”

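The generated sentence is a shortened version of the reference. Every word it contains appears in the reference, in the same order, but "across the yard" is missing. Every ROUGE variant therefore gives it perfect precision but only partial recall.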
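Working the numbers by hand makes the pattern concrete. This assumes lowercasing and punctuation removal. The reference then has 8 words and the generated sentence has 5. "the" occurs three times in the reference and twice in the output, so it contributes 2 to the clipped overlap.

$$
\begin{aligned}
\text{ROUGE-1:}\ & P = \tfrac{5}{5} = 1, \quad R = \tfrac{5}{8} = 0.625, \quad F_1 = \tfrac{2 \cdot 1 \cdot 0.625}{1 + 0.625} = \tfrac{10}{13} \approx 0.769 \\
\text{ROUGE-2:}\ & P = \tfrac{4}{4} = 1, \quad R = \tfrac{4}{7} \approx 0.571, \quad F_1 = \tfrac{8}{11} \approx 0.727 \\
\text{ROUGE-L:}\ & \mathrm{LCS} = 5, \quad P = \tfrac{5}{5} = 1, \quad R = \tfrac{5}{8} = 0.625, \quad F_1 = \tfrac{10}{13} \approx 0.769 \\
\text{ROUGE-S:}\ & P = \tfrac{10}{10} = 1, \quad R = \tfrac{10}{28} \approx 0.357, \quad F_1 = \tfrac{10}{19} \approx 0.526
\end{aligned}
$$

ROUGE-L equals ROUGE-1 here because the entire generated sentence is a subsequence of the reference. Recall is lowest for ROUGE-S: the eight-word reference contains 28 skip-bigrams, and the output covers only 10 of them.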

ROUGE in Action: Where It’s Used

ROUGE is especially popular in text summarization, but its applications go far beyond that. Some of the most common use cases include:

- Automatic summarization: Comparing AI summaries with human-written ones to ensure critical points are captured.

- Machine translation: Checking if the translated version conveys the same information as the reference.

- Content generation: Comparing generated blog posts, descriptions, or product copy with reference versions to check that key content is present.

- Dialogue systems: Comparing chatbot responses with reference replies as a rough signal of relevance.

In each of these cases, ROUGE serves as a first step in content evaluation, often followed by human quality checks.

Strengths of ROUGE

ROUGE remains a preferred choice in NLP for several reasons:

- Simplicity: Easy to understand and interpret

- Efficiency: Fast computation, ideal for large datasets

- Community support: Widely used, with ready-made implementations such as Google's rouge-score package and the Hugging Face Evaluate library

Researchers value ROUGE for its transparency and reproducibility. It is the standard metric reported on summarization benchmarks such as CNN/Daily Mail.

Limitations and Criticism

Despite its usefulness, ROUGE has clear flaws. The most common criticism is that it relies on surface-level word matching, so it does not account for synonyms, paraphrasing, or deeper meaning.

Some of the known limitations include:

- Rewards exact word matches over equally valid paraphrases

- Ignores grammar, tone, and coherence

- Can be inflated: long outputs raise recall, and off-topic text that happens to share common words with the reference still earns credit

- Doesn't detect factual errors or hallucinations in the generated text

Due to these limitations, ROUGE is best used alongside human evaluation or advanced semantic metrics such as BERTScore or BLEURT.
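A quick experiment makes two of these weaknesses concrete. The sketch below uses a simplified ROUGE-1 with lowercased alphanumeric tokens and clipped counts; this is an assumption, not any toolkit's exact behavior. The example sentences are made up for illustration. A faithful paraphrase shares almost no words with the reference, while a padded output that just appends unrelated text still reaches perfect recall.

```python
from collections import Counter
import re


def rouge1_scores(reference: str, candidate: str) -> tuple[float, float]:
    """Return (precision, recall) for unigram overlap with clipped counts."""
    ref = Counter(re.findall(r"[a-z0-9]+", reference.lower()))
    cand = Counter(re.findall(r"[a-z0-9]+", candidate.lower()))
    overlap = sum((ref & cand).values())
    # max(..., 1) guards against division by zero on empty inputs.
    return overlap / max(sum(cand.values()), 1), overlap / max(sum(ref.values()), 1)


reference = "The dog chased the ball across the yard."
paraphrase = "A puppy pursued a toy over the lawn."  # same meaning, different words
padded = reference + " It was a sunny day and everyone in the neighborhood was outside."

print(rouge1_scores(reference, paraphrase))  # (0.125, 0.125)
print(rouge1_scores(reference, padded))      # (0.4, 1.0)
```

Only the padded output's precision drops. This is one reason to report F1 rather than recall alone.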

Tools That Support ROUGE Evaluation

Developers don’t need to calculate ROUGE manually. Multiple libraries and platforms now offer ready-to-use ROUGE scoring systems:

- Python rouge-score package by Google

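- Hugging Face Evaluate – provides a ready-made ROUGE metric (older code loaded it through Hugging Face Datasets, whose metrics have since moved to Evaluate)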

- NLTK and spaCy toolkits – do not compute ROUGE themselves, but help with tokenization and other preprocessing before scoring

- ROUGE Toolkit (ROUGE-1.5.5) – the original Perl implementation, still used in some research

These tools make it easier for teams to integrate ROUGE into testing pipelines or training validation.
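The following sketch shows how Google's rouge-score package is typically called. `RougeScorer` takes the list of ROUGE types to compute, with optional Porter stemming via `use_stemmer`. Its `score` method takes the reference (target) first and the generated text (prediction) second, and returns precision, recall, and F-measure for each type. The `score_pair` wrapper and the fallback when the package is not installed are illustrative additions, not part of the library.

```python
# Assumes: pip install rouge-score (Google's package); falls back to None if absent.
try:
    from rouge_score import rouge_scorer
except ImportError:  # package not installed in this environment
    rouge_scorer = None


def score_pair(reference: str, candidate: str):
    """Hypothetical helper: ROUGE-1, ROUGE-2 and ROUGE-L for one candidate.

    Returns a dict of Score(precision, recall, fmeasure) tuples, or None
    when rouge-score is not installed.
    """
    if rouge_scorer is None:
        return None
    scorer = rouge_scorer.RougeScorer(["rouge1", "rouge2", "rougeL"], use_stemmer=True)
    # score() takes the reference (target) first, then the generated text (prediction).
    return scorer.score(reference, candidate)


scores = score_pair("The dog chased the ball across the yard.", "The dog chased the ball.")
if scores is None:
    print("rouge-score is not installed")
else:
    for name, s in scores.items():
        print(f"{name}: P={s.precision:.3f} R={s.recall:.3f} F1={s.fmeasure:.3f}")
```

Passing the arguments in the wrong order silently swaps precision and recall, so the reference-first convention is worth a comment in any pipeline that uses it.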

Best Practices When Using ROUGE

To make the most of ROUGE evaluation, developers and researchers can follow these simple tips:

- Combine ROUGE-1, ROUGE-2, and ROUGE-L for more complete evaluation.

- Report F1 alongside precision and recall rather than relying on either alone.

- Score a representative set of outputs, and use several reference texts per input where available, so results do not hinge on a single phrasing.

- Pair ROUGE with semantic metrics or human evaluation for high-stakes outputs.
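One way to act on the advice about multiple outputs and references is sketched below. It assumes a simplified ROUGE-1 F1 with lowercased alphanumeric tokens. It also assumes two conventions that are choices rather than standards: each output keeps its best score across its references, and per-output scores are then averaged. Toolkits aggregate differently, so it is worth checking which convention a published number uses before comparing against it. The function names and example sentences are hypothetical.

```python
from collections import Counter
from statistics import mean
import re


def unigram_f1(reference: str, candidate: str) -> float:
    """Simplified ROUGE-1 F1 with clipped counts and lowercase tokenization."""
    ref = Counter(re.findall(r"[a-z0-9]+", reference.lower()))
    cand = Counter(re.findall(r"[a-z0-9]+", candidate.lower()))
    overlap = sum((ref & cand).values())
    if overlap == 0:
        return 0.0
    precision = overlap / sum(cand.values())
    recall = overlap / sum(ref.values())
    return 2 * precision * recall / (precision + recall)


def corpus_rouge1(candidates: list[str], references: list[list[str]]) -> float:
    """Mean over outputs of the best ROUGE-1 F1 against that output's references."""
    if len(candidates) != len(references):
        raise ValueError("need one list of references per candidate")
    return mean(
        max(unigram_f1(ref, cand) for ref in refs)
        for cand, refs in zip(candidates, references)
    )


candidates = ["The dog chased the ball.", "A cat slept."]
references = [
    ["The dog chased the ball across the yard.", "A dog ran after the ball."],
    ["The cat slept on the sofa."],
]
print(round(corpus_rouge1(candidates, references), 3))  # 0.607
```

A second reference can only raise an output's score under the best-of convention. That matches the intent of giving credit for any valid phrasing, but it means scores computed with different numbers of references are not directly comparable.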

Conclusion

ROUGE remains a foundational metric for evaluating machine-generated text. It offers a fast, objective, and reproducible way to measure how much of a reference's content an AI model's output reproduces. It is not perfect, especially when it comes to judging meaning or creativity, but its simplicity and efficiency make it a go-to tool for many NLP projects. Researchers and developers who understand what ROUGE can and cannot do can use it more effectively: as a guide for AI-generated content, not a final judge. As machines write more and more, tools like ROUGE will remain key to keeping quality in check.